The Knowledge Web Problem

There is a huge problem rapidly approaching on the horizon of data society: the authenticity and custodianship of the Knowledge Web. This has been especially highlighted by the ever-expanding web crawling for AI training data and the soon-to-be-encountered 'unravelling' of AI content as it devours its own output to train its New Model Algorithms (NMAs).

Think for a moment - I write a short blogpost using AI and publish it to my blog on 'science and the environment'. ChatGPT_n produces fabulous content I can pump out through my socmed channels and RSS feed. Saves time, can be automated, really useful! Along comes a web crawler doing an LLM training trawl (think massive fishing trawler with those huge 'catchall' nets that were later banned cos they caught too many small fish), and it scoops up my AI-produced article. The algorithm training design (ATD) uses the general metadata to categorise and list the article: environment, science, blog, author-name (me), pub-date, sub-topics, etc. It is therefore listed as being written by me - let's say a scientist with a PhD - and is considered a serious knowledge contribution. The ATD may rate the quality of the content according to the author name and type of content, giving it a 4 or even 5 star rating. It may add further ratings based on the number of times the article has been shared or accessed (via web analytics data), implying that the relevant population considers it useful (search results already work like this). These potential value rating mechanisms (my descriptions of what is likely happening) may affect how, and how much, the article is used in ChatGPT_n outputs.
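
To make that worry concrete, here is a minimal sketch of the kind of metadata-only rating I am guessing at. Everything in it is hypothetical: the `Article` fields, the weights and the `rate_article` function are my own illustration, not how any real crawler or training pipeline is known to work. The only point is that the rating never gets to see where the text actually came from.

```python
from dataclasses import dataclass


@dataclass
class Article:
    # Hypothetical metadata a crawler might collect about a page.
    author_has_phd: bool
    content_type: str   # e.g. "blog" or "paper"
    share_count: int
    ai_generated: bool  # the true provenance, NOT exposed in the metadata


def rate_article(article: Article) -> int:
    """Return a 1-5 star rating from metadata alone (illustrative weights)."""
    stars = 3
    if article.author_has_phd:
        stars += 1
    if article.share_count >= 100:
        stars += 1
    # article.ai_generated is never consulted: the rating cannot see provenance.
    return min(stars, 5)


human_written = Article(True, "blog", 250, ai_generated=False)
ai_written = Article(True, "blog", 250, ai_generated=True)

# Both articles receive exactly the same rating.
print(rate_article(human_written), rate_article(ai_written))
```

Under these assumed weights, the human-written and the AI-written post are indistinguishable to the rating, because nothing in the metadata separates them.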

But! The article is really just AI churn: text stitched together from keywords and other semantically associated content. It's not real. In some cases it might be fairly accurate churn, in other cases it might be entirely fictitious combobulation. And as ATDs begin to train themselves on previously AI-generated content, a kind of primordial soup of information emerges. This is a very approximate description of the unravelling of the Knowledge Web. I believe this is the number one problem of embracing AI content generators in the public sphere, and as far as I can tell there is nothing in the web crawl system designed to account for it.
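
A toy bit of bookkeeping shows how quickly the soup thickens. This is a sketch under loud assumptions: the function name `ai_share_by_generation`, the corpus sizes and the 50% figure are all invented for illustration, and the model only counts documents. It says nothing about how much the quality of the output degrades.

```python
def ai_share_by_generation(human_docs, new_docs_per_gen, ai_fraction, generations):
    """Track the share of AI-generated documents in a growing crawl corpus.

    Assumes (hypothetically) that the corpus starts fully human-written and
    that a fixed fraction of each new batch of documents is AI-generated.
    """
    total = float(human_docs)
    ai_docs = 0.0
    shares = []
    for _ in range(generations):
        ai_docs += new_docs_per_gen * ai_fraction
        total += new_docs_per_gen
        shares.append(ai_docs / total)
    return shares


# Made-up numbers: 1000 human-written documents to start with, then 500 new
# documents per crawl generation, half of them AI-generated.
shares = ai_share_by_generation(1000, 500, 0.5, 3)
print([round(s, 3) for s in shares])  # [0.167, 0.25, 0.3]
```

With these invented numbers the AI-generated share climbs with every crawl and drifts towards the fraction of new content that is AI-generated, and nothing in the corpus marks which documents are which.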

Currently it may appear that there is some separation between 'proper academic content' (Scopus databases, for example) and everything else on the web, but that is generally a false assurance, as millions of paywalled academic papers and books are already in the wild. Add to that the many open access papers, the open educational resources, the blogs and websites with high-quality knowledge and information, and you have essentially built a completely unpoliceable system.

Don't expect me to have answers - this question is as yet unaddressed and unanswerable. Katz & Gandel warned us back in 2008 about the challenges of The Tower, The Cloud and Posterity. They referred to the current digital information age as Archivy 4.0, and highlighted issues of authenticity, versioning records, custodianship responsibilities and intellectual property. Their work stands today as a stark reminder of what happens when we don't pay attention, dismiss what seem like obscure or fringe ideas, and just think we all know 'better'. This is exactly what is happening now, with the advent of AI tools in the public sphere. Sci-fi tropes about an impending robot apocalypse, or the opposite 'let go of your fear and believe!', or simple vague acceptance of time saving, ease of use and convenience are all normalising this weird and wonderful ability to get human-voiced search results that seem so ordinary. Each single input/output interaction seems so harmless, so futuristic, how can it possibly be a bad thing?

Too many people in the AI discussions have next to no knowledge of what is involved. I count myself among them, and yet I also feel I have a far greater grasp of the technical aspects and implications (which after all are the most important, at this point in time), so I feel exasperated at the sheer level of simplistic ignorance being exhibited in the academic community. "It's just like the printing press!" (no, it really, really isn't), "it's not sentient, what damage can it do?" (sentience isn't even close to being an issue at this point in time), "it will open the door to much better working lives and quality of work" (this one really is dumbfoundingly idealistic), and so on. Academic concern seems mainly focused on implications for assessment, as the persistent view of students is that they are all lazy and want to cheat by any means available. As this is discussed variously elsewhere I won't dig into it here, but if this is your perception of the student body, it says more about your teaching and what you are like as a lecturer than it does about the students.

The real problem of student work and AI-generated knowledge content is the unravelling of the knowledge web. No one is really talking about this much as yet, but I did spot an article about it - and there may be others. I suspect people like Jonathan Zittrain or danah boyd are already thinking hard about this. It's the classic wicked and tangled challenge: the more you try to sort out one aspect, the worse other aspects become.

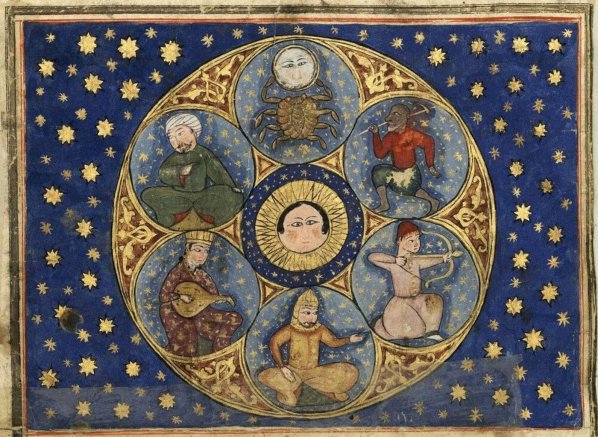

Image: taken from The Cosmic Dance, by Stephen Ellcock, ~"a panglobal collection of remarkable, arresting, and surprising images drawn from the entire history of art to explore the ancient belief that the cosmos is reflected in all living things."